Patroni를 이용하여 PostgreSQL HA 구성

0. 구성환경

| ip | hostname | 설치 제품 |

| 192.168.56.201 | pgserver1 | Cent7 minimal, PostgreSQL 13, patroni |

| 192.168.56.202 | pgserver2 | Cent7 minimal, PostgreSQL 13, patroni |

| 192.168.56.203 | pgserver3 | Cent7 minimal, etcd, HAproxy |

기본적으로 위의 정보는 /etc/hosts와 /etc/hostname에 설정이 되어 있어야 하고, SELINUX=disabled 된 상태 (sed -i s/SELINUX=enforcing/SELINUX=disabled/ /etc/selinux/config)

PG 서버는 source 버전을 이용하여 컴파일 하여 사용

patroni : PostgreSQL의 관리를 하는 python 기반의 프로그램. Auto failover, pg_rewind를 이용한 replication 재 구성

etcd : key-value구조를 가지는 데이터베이스로 patroni에서 사용하는 메타 데이터와 patroni에서 전송되는 상태 값 저장. 이 값을 patroni에서 참조하여 장애 조치 또는 auto failover를 수행 함

HAproxy : patroni와 통신 하면서 현재의 Primary에 대한 정보를 받아와서 Client로의 연결을 Primary로 연결 시켜주는 라우터 역할

repmgr 또는 EFM은 각각의 데몬끼리 서로 통신하면서 상태값을 체크하면서 auto fail-over를 수행하는 반면, patroni는 상태값을 etcd에 저장하고 이 값을 참조하여 장애 조치나 failover를 수행

patroni 초기 기동 시 etcd와의 연결이 되지 않으면 initdb도 수행되지 않음

그래서 etcd를 3개 이상으로 구성하는 것을 권장함

patroni는 PostgreSQL 유저와 동일한 O/S로 기동

etcd, HAproxy는 동일 유저 아니어도 상관 없음

여기 테스트에서는 1개의 etcd, HAproxy로 구성하여 테스트 진행

1. PostgreSQL 설치 (pgserver1, pgserver2)

PostgreSQL source 버전을 다운 받아서 설치 진행

| yum -y install epel-release yum -y install gcc gcc-c++ make autoconf readline readline-devel zlib zlib-devel openssl openssl-devel gettext gettext-devel python python-devel wget useradd postgres mkdir /engine /data chwon -R postgres:postgres /engine /data postgres 유저로 소스파일 압축 푼 디렉터리로 이동하여 컴파일 진행 ./configure --prefix=/engine/pg13 make make world make install make install-world postgres 유저의 .bash_profile에 /engine/pg13/bin 패스 추가 |

2. patroni 설치 (pgserver1, pgserver2)

root유저로 설치를 진행하는데, 파이썬에서 사용하는 psycopg2 컴파일 시에 postgresql 라이브러리를 참조하여서 컴파일이 진행 되므로

root유저의 path에 /engine/pg13/bin:/engine/pg13/lib:/engine/pg13/include 추가 후 적용

적용 후 which psql로 정상적으로 PATH가 적용 되었는지 확인 후 진행

| yum -y install groupinstall "Development Tools" yum -y install gcc python3 python3-devel python3-pip gcc libpq-devel which pip3로 설치 확인 pip3 install patroni pip3 install python-etcd pip3 install psycopg2 which patroni로 patroni 설치 확인 후 patroni --version 으로 patroni가 정상 실행 되는지 확인 --> 여기서 에러 발생 할 경우 --> pip3 uninstall psycopg2 --> yum -y install postgresqlxx-libs (postgreSQL 버전에 맞는 파일 설치) --> pip3 install psycopg2 --> patroni --version 으로 다시 확인 |

3. etcd 설치

pgserver3 서버에 root로 etcd 설치 진행

etcd가 patroni 보다 먼저 기동되어 있어야 함

| dnf를 이용하여 설치 진행 시 | 파일을 다운받아서 설치 진행 시 |

| yum -y install epel-release yum -y install dnf dnf install -y etcd which etcd etcd --version |

https://github.com/etcd-io/etcd/releases/tag/v3.5.11 에서 다운로드 후 tar xvfz etcd-v3.5.11-linux-amd64.tar.gz mv etcd-v3.5.11-linux-amd64 etcd-v3.5.11 /engine/etcd-v3.5.11/etcd --version etcd Version: 3.5.11 Git SHA: 3b252db4f Go Version: go1.20.12 Go OS/Arch: linux/amd64 |

| etcd config 파일 작성 |

| echo "name: 'pgserver3' data-dir: '/data/etcd/pgserver3.etcd' listen-peer-urls: 'http://192.168.56.203:2380,http://127.0.0.1:2380' listen-client-urls: 'http://127.0.0.1:2379,http://192.168.56.203:2379' advertise-client-urls: 'http://192.168.56.203:2379' initial-cluster: 'pgserver3=http://192.168.56.203:2380' initial-advertise-peer-urls: 'http://192.168.56.203:2380' initial-cluster-state: 'new' initial-cluster-token: 'node1' enable-v2: true" > /engine/etcd-v3.5.11/etcd.yml |

| etcd 기동 |

| /engine/etcd-v3.5.11/etcd --config-file /engine/etcd-v3.5.11/etcd.yml > /engine/etcd-v3.5.11/etcd.log 2>&1 & 또는 etcd --config-file /engine/etcd-v3.5.11/etcd.yml > /tmp/etcd.log 2>&1 & |

| etcd 기동 확인 |

| /engine/etcd-v3.5.11/etcdctl member list -w table +------------------+---------+-------+----------------------------+----------------------------+------------+ | ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER | +------------------+---------+-------+----------------------------+----------------------------+------------+ | 1bf71b8455ab493f | started | etcd1 | http://192.168.56.203:2380 | http://192.168.56.203:2379 | false | +------------------+---------+-------+----------------------------+----------------------------+------------+ /engine/etcd-v3.5.11/etcdctl endpoint health -w table +----------------+--------+-------------+-------+ | ENDPOINT | HEALTH | TOOK | ERROR | +----------------+--------+-------------+-------+ | 127.0.0.1:2379 | true | 93.882665ms | | +----------------+--------+-------------+-------+ /engine/etcd-v3.5.11/etcdctl endpoint status -w table +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS | +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ | 127.0.0.1:2379 | 1bf71b8455ab493f | 3.5.11 | 20 kB | true | false | 4 | 9 | 9 | | +----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+ |

4. patroni 기동

pgserver1, pgserver2에서 postgres 유저로 기동

postgreSQL서버를 rpm으로 설치 후 서비스가 기동되어 있으면, 해당 서비스 종료 후, 서비스 disable 한 후 진행

데이터 디렉터리 여기서는 /data/pg13인데 /data 디렉터리 까지만 생성해 놓고 권한을 postgres 유저에게 주고서 실행해야 한다

/data/pg13까지 만들어 놓을 경우 patroni가 initial을 진행 할때 해당 디렉터리 /data/pg13의 권한을 변경하려고 하면서 제대로 변경이 되지 않는 경우 에러 발생

/data 까지만 만들어 놓고 chown -R postgres:postgres /data로 해 놓은 상태에서 패트로니 초기 기동

scope : patroni cluster이름( 여기서는 postgres로 지정, 이후 명령어에 postgres가 나오는 부분은 클러스터명을 지정한 것임)

name : patroni cluster내에서 유니크한 이름

etcd : etcd 서버 정보. etcd 서버가 여러대의 클러서터로 구성 되어 있으면 컴마로 구분하여 여러 IP 지정

pg_hba 부분에서는 해당 버전에 맞는 값으로 변경 md5 --> scram-sha-256

| pgserver1 | pgserver2 |

| echo "scope: postgres name: pgsvr1 restapi: listen: '192.168.56.201:8008' connect_address: '192.168.56.201:8008' etcd: hosts: '192.168.56.203:2379' bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: true parameters: max_wal_size: '1G' max_wal_senders: 8 initdb: - encoding: UTF8 - data-checksums pg_hba: - host replication repl 192.168.56.201/32 md5 - host replication repl 192.168.56.202/32 md5 - host all all 0.0.0.0/0 md5 users: admin: password: admin options: - createrole - createdb postgresql: listen: '192.168.56.201:5413' connect_address: '192.168.56.201:5413' data_dir: /data/pg13 pgpass: /tmp/pgpass0 authentication: replication: username: repl password: repl superuser: username: postgres password: postgres rewind: username: rewind_user password: rewind_user parameters: unix_socket_directories: . tags: nofailover: false noloadbalance: false clonefrom: false nosync: false" > /engine/patroni.yml |

echo "scope: postgres name: pgsvr2 restapi: listen: '192.168.56.202:8008' connect_address: '192.168.56.202:8008' etcd: hosts: '192.168.56.203:2379' bootstrap: dcs: ttl: 30 loop_wait: 10 retry_timeout: 10 maximum_lag_on_failover: 1048576 postgresql: use_pg_rewind: true parameters: max_wal_size: '1G' max_wal_senders: 8 initdb: - encoding: UTF8 - data-checksums pg_hba: - host replication repl 192.168.56.201/32 md5 - host replication repl 192.168.56.202/32 md5 - host all all 0.0.0.0/0 md5 users: admin: password: admin options: - createrole - createdb postgresql: listen: '192.168.56.202:5413' connect_address: '192.168.56.202:5413' data_dir: /data/pg13 pgpass: /tmp/pgpass0 authentication: replication: username: repl password: repl superuser: username: postgres password: postgres rewind: username: rewind_user password: rewind_user parameters: unix_socket_directories: . tags: nofailover: false noloadbalance: false clonefrom: false nosync: false" > /engine/patroni.yml |

| /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 & | /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 & |

etcd와 patroni가 정상적으로 연결이 되면, patroni는 설정 파일 값을 읽어서 PostgreSQL replication을 자동으로 구성 한다

etcd와 통신이 되지 않으면 patroni는 아무런 동작을 하지 못 한다

patroni 상태 체크

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) --+-----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +-----------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 1 | | | pgsvr2 | 192.168.56.202:5413 | Replica | streaming | 1 | 0 | +-----------+---------------------+---------+-----------+----+-----------+ |

state 값이 starting 또는 TL 값이 Reader와 동일하지 않은 경우 해당 서버의 동기화 상태가 이상이 있는 것이므로 PostgreSQL로그를 확인하여 해당 노드를 수동으로 재 구성해 주어야 할 수도 있다

실제 Patroni를 강제 종료 하고, PostgreSQL을 강제 종료 하는 등의 테스트를 진행하다 보니, Promotion 되면서 wal log들이 없다고 하면서 동기화가 깨지는 경우들이 발생 함

물론 운영 환경에서는 그런 경우가 없겠지만...

해당 경우에는 pause 명령을 이용하여 관리를 수동으로 전환한 후에 문제가 있는 서버의 PostgreSQL을 수동으로 종료 후 $PGDATA 디렉터리를 삭제 하고 resume 명령을 수행하면 된다

(이 경우는 내부적으로 pg_basebackup을 사용하여 복구)

4.1 switch over 수행

patroni를 이용하여 switch over 수행

reder나 replica 아무 곳에서 수행해도 됨

| /usr/local/bin/patronictl -c /engine/patroni.yml switchover |

Reader와 Replica Role이 바뀌면서 TL이 2로 변경 된 것 확인 가능

4.2 Reader 변경 히스토리 확인

patroni가 자동으로 failover를 수행했거나, 수동 switchover를 수행하여서 Reader가 변경 된 경우

해당 변경 히스토리를 확인 할 수 있다

| /usr/local/bin/patronictl -c /engine/patroni.yml history postgres +----+----------+------------------------------+----------------------------------+------------+ | TL | LSN | Reason | Timestamp | New Leader | +----+----------+------------------------------+----------------------------------+------------+ | 1 | 50549776 | no recovery target specified | 2024-01-08T13:00:31.621876+09:00 | pgsvr2 | | 2 | 50717520 | no recovery target specified | 2024-01-08T13:14:54.865611+09:00 | pgsvr1 | | 3 | 51162816 | no recovery target specified | 2024-01-08T13:43:41.631876+09:00 | pgsvr2 | | 4 | 51163096 | no recovery target specified | 2024-01-08T13:46:24.821611+09:00 | pgsvr1 | | 5 | 51272632 | no recovery target specified | 2024-01-09T10:22:37.616876+09:00 | pgsvr2 | | 6 | 67109024 | no recovery target specified | | | +----+----------+------------------------------+----------------------------------+------------+ |

4.3 파라미터 변경

patroni.yml에 여러 설정을 해 줄 수 없으므로 PostgreSQL 클러스터가 구성 된 이후에 변경 가능

필요한 내용을 postgresql 밑의 parameter 부분에 추가 한 후에 저장 후 반영 (reload, restart)

파라미터 = 값 이 아니라 파라미터: 값으로 설정 해야 함

| /usr/local/bin/patronictl -c /engine/patroni.yml edit-config loop_wait: 10 maximum_lag_on_failover: 1048576 postgresql: parameters: max_wal_senders: 8 max_wal_size: 1G use_pg_rewind: true retry_timeout: 10 ttl: 30 |

| +++ @@ -4,6 +4,23 @@ parameters: max_wal_senders: 8 max_wal_size: 1G + max_connections: 500 + port: 5413 + shared_buffers: 1G + logging_collector: on + log_destination: 'stderr' + log_directory: 'log' + log_filename: 'postgresql-%Y-%m-%d.log' + log_checkpoints: on + log_connections: on + log_disconnections: on + log_lock_waits: on + log_temp_files: 0 + log_min_duration_statement: 500 + log_line_prefix: '%t [%p]: db=%d,user=%u,app=%a,client=%h ' + shared_preload_libraries: 'pg_stat_statements' + pg_stat_statements.max: 10000 + pg_stat_statements.track: all use_pg_rewind: true retry_timeout: 10 ttl: 30 Apply these changes? [y/N]: y Configuration changed |

| patronictl [reload|restart|pause|resume] [OPTIONS] CLUSTER_NAME [MEMBER_NAMES] patronictl -c /engine/patroni.yml restart postgres + Cluster: postgres (7321565912410233191) ---------+----+-----------+-----------------+ | Member | Host | Role | State | TL | Lag in MB | Pending restart | +--------+---------------------+---------+---------+----+-----------+-----------------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 6 | | * | | pgsvr2 | 192.168.56.202:5413 | Replica | running | 6 | 0 | * | +--------+---------------------+---------+---------+----+-----------+-----------------+ When should the restart take place (e.g. 2024-01-09T17:24) [now]: Are you sure you want to restart members pgsvr1, pgsvr2? [y/N]: y Restart if the PostgreSQL version is less than provided (e.g. 9.5.2) []: Success: restart on member pgsvr1 Success: restart on member pgsvr2 |

4.4 pause / resume

일시적으로 patroni가 PostgreSQL에 대한 유지 관리를 중지 하고, 수동으로 PostgreSQL에 대한 관리를 진행하고자 할 경우에 사용

다음의 예는 서버가 현재 동기화 문제가 발생하고 있는 상태에서 조치를 취하기 위하여 pause/resume 사용

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | starting | | unknown | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+----------+----+-----------+ --pgsvr1이 동기화가 되고 있지 않는 상태 /usr/local/bin/patronictl -c /engine/patroni.yml pause postgres Success: cluster management is paused /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | starting | | unknown | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+----------+----+-----------+ Maintenance mode: on -- 수동 관리 모드 이므로 문제가 있는 pgsvr1 서버에서 PostgreSQL 종료 후 $PGDATA삭제 ps -ef | grep postgres postgres 3507 3092 0 09:14 pts/0 00:00:07 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 4019 1 0 09:27 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.201 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 4021 4019 0 09:27 ? 00:00:00 postgres: postgres: logger postgres 4022 4019 0 09:27 ? 00:00:00 postgres: postgres: startup recovering 000000060000000000000003 pg_ctl stop -D $PGDATA -mf waiting for server to shut down.... done server stopped ps -ef | grep postgres postgres 3507 3092 0 09:14 pts/0 00:00:08 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml cd $PGDATA pwd /data/pg13 rm -rf * /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | stopped | | unknown | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+---------+----+-----------+ Maintenance mode: on |

| /usr/local/bin/patronictl -c /engine/patroni.yml resume postgres Success: cluster management is resumed /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ cd $PGDATA ls backup_label.old current_logfiles patroni.dynamic.json pg_hba.conf pg_ident.conf.backup pg_notify pg_snapshots pg_subtrans PG_VERSION postgresql.auto.conf postgresql.conf postmaster.pid backup_manifest global pg_commit_ts pg_hba.conf.backup pg_logical pg_replslot pg_stat pg_tblspc pg_wal postgresql.base.conf postgresql.conf.backup standby.signal base log pg_dynshmem pg_ident.conf pg_multixact pg_serial pg_stat_tmp pg_twophase pg_xact postgresql.base.conf.backup postmaster.opts ps -ef | grep postgres postgres 3507 3092 0 09:14 pts/0 00:00:10 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 5944 1 0 09:50 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.201 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 5946 5944 0 09:50 ? 00:00:00 postgres: postgres: logger postgres 5947 5944 0 09:50 ? 00:00:00 postgres: postgres: startup recovering 000000080000000000000006 postgres 5948 5944 0 09:50 ? 00:00:00 postgres: postgres: checkpointer postgres 5949 5944 0 09:50 ? 00:00:00 postgres: postgres: background writer postgres 5950 5944 0 09:50 ? 00:00:00 postgres: postgres: stats collector postgres 5951 5944 0 09:50 ? 00:00:01 postgres: postgres: walreceiver streaming 0/6000148 postgres 5960 5944 0 09:50 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.201(56398) idle postgres 5965 5944 0 09:51 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.201(56404) idle |

4.5 Patroni 테스트

4.5.1 replica 서버의 patroni 강제 종료

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ 현재 192.168.56.201 서버가 replica 상태 [postgres@pgserver1 pg13]v13 $ ps -ef | grep patroni postgres 3507 3092 0 09:14 pts/0 00:00:18 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml [postgres@pgserver1 pg13]v13 $ kill -9 3507 [1]+ Killed /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 (wd: ~) (wd now: /data/pg13) /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ 상태 변화 없음 master에 접속해서 데이터 변경 발생 psql -h 192.168.56.202 -p 5413 -U postgres -W create database test2 ; postgres=# \l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+----------+----------+-------------+-------------+----------------------- postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres test | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test2 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | (5 rows) replica 서버에 접속해서 변경사항이 반영 되었는지 확인 psql -h 192.168.56.201 -p 5413 -U postgres -W postgres=# \l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+----------+----------+-------------+-------------+----------------------- postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres test | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test2 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | (5 rows) patroni 재 기동 /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 & [1] 7247 /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ role 변경 없음 |

4.5.2 Leader 서버의 patroni 강제 종료

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ 192.168.56.202 서버에서 patroni 강제 종료 [postgres@pgserver2 pg_wal]v13 $ ps -ef | grep patroni postgres 3386 3013 0 09:14 pts/0 00:00:17 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml [postgres@pgserver2 pg_wal]v13 $ kill -9 3386 [1]+ Killed /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 (wd: ~) (wd now: /data/pg13/pg_wal) [postgres@pgserver2 pg_wal]v13 $ ps -ef | grep postgres postgres 3500 1 0 09:18 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 3502 3500 0 09:18 ? 00:00:00 postgres: postgres: logger postgres 3506 3500 0 09:18 ? 00:00:00 postgres: postgres: checkpointer postgres 3507 3500 0 09:18 ? 00:00:00 postgres: postgres: background writer postgres 3508 3500 0 09:18 ? 00:00:00 postgres: postgres: stats collector postgres 4043 3500 0 09:27 ? 00:00:00 postgres: postgres: walwriter postgres 4044 3500 0 09:27 ? 00:00:00 postgres: postgres: autovacuum launcher postgres 4045 3500 0 09:27 ? 00:00:00 postgres: postgres: logical replication launcher /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 8 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 8 | | +--------+---------------------+---------+-----------+----+-----------+ /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) --------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+--------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 8 | | +--------+---------------------+--------+---------+----+-----------+ /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) --------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+--------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 9 | | +--------+---------------------+--------+---------+----+-----------+ 이 경우 기존 201 서버와 202서버 모두 단독 운영 되는 상태 (둘 다 Primary인 상태) 기존 leader(202) 서버에 데이터 변경 사항 발생 시킴 psql -h 192.168.56.202 -p 5413 -U postgres -W postgres=# \l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+----------+----------+-------------+-------------+----------------------- postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres test | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test2 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | (5 rows) postgres=# create database shutdown_after_patroni ; postgres=# \c shutdown_after_patroni shutdown_after_patroni=# create table t1 (id serial , name varchar(10)) ; shutdown_after_patroni=# insert into t1 (name) values ('test') ; shutdown_after_patroni=# insert into t1 (name) values ('test') ; shutdown_after_patroni=# insert into t1 (name) values ('test') ; shutdown_after_patroni=# select count(*) from t1 ; count ------- 3 (1 row) 신규 leader(201) 서버에 데이터 변경 사항 발생 시킴 psql -h 192.168.56.201 -p 5413 -U postgres -W postgres=# \l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+----------+----------+-------------+-------------+----------------------- postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres test | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test2 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | (5 rows) postgres=# create database test3 ; postgres=# \c test3 test3=# create table t2 (id serial , name varchar(10)) ; test3=# insert into t2(name) values ('201 server') ; test3=# insert into t2(name) values ('201 server') ; test3=# insert into t2(name) values ('201 server') ; test3=# insert into t2(name) values ('201 server') ; test3=# insert into t2(name) values ('201 server') ; test3=# insert into t2(name) values ('201 server') ; test3=# select count(*) from t2 ; count ------- 6 (1 row) patroni 재 기동 [postgres@pgserver2 pg13]v13 $ /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 & [1] 6107 /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 9 | | | pgsvr2 | 192.168.56.202:5413 | Replica | running | 9 | 0 | +--------+---------------------+---------+---------+----+-----------+ 202서버에 접속해서 기존 반영 되었던 shutdown_after_patroni 데이터베이스 체크 psql -h 192.168.56.202 -p 5413 -U postgres -W postgres=# \l List of databases Name | Owner | Encoding | Collate | Ctype | Access privileges -----------+----------+----------+-------------+-------------+----------------------- postgres | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | template0 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres template1 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | =c/postgres + | | | | | postgres=CTc/postgres test | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test2 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | test3 | postgres | UTF8 | en_US.UTF-8 | en_US.UTF-8 | (6 rows) postgres=# \c test3 test3=# \dt List of relations Schema | Name | Type | Owner --------+------+-------+---------- public | t2 | table | postgres (1 row) test3=# select count(*) from t2 ; count ------- 6 (1 row) test3=# select * from t2 ; id | name ----+------------ 1 | 201 server 2 | 201 server 3 | 201 server 4 | 201 server 5 | 201 server 6 | 201 server (6 rows) |

4.5.3 Replica PostgreSQL 서버 Down

patroni가 자동으로 PostgreSQL 기동

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 9 | | | pgsvr2 | 192.168.56.202:5413 | Replica | streaming | 9 | 0 | +--------+---------------------+---------+-----------+----+-----------+ [postgres@pgserver2 pg13]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:05 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 6157 1 0 11:21 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 6159 6157 0 11:21 ? 00:00:00 postgres: postgres: logger postgres 6160 6157 0 11:21 ? 00:00:00 postgres: postgres: startup recovering 000000090000000000000006 postgres 6161 6157 0 11:21 ? 00:00:00 postgres: postgres: checkpointer postgres 6162 6157 0 11:21 ? 00:00:00 postgres: postgres: background writer postgres 6163 6157 0 11:21 ? 00:00:00 postgres: postgres: stats collector postgres 6170 6157 0 11:21 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(53970) idle postgres 6174 6157 0 11:21 ? 00:00:08 postgres: postgres: walreceiver streaming 0/60330D8 [postgres@pgserver2 pg13]v13 $ kill -9 6157 [postgres@pgserver2 pg13]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:05 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 6210 1 0 12:18 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 6212 6210 0 12:18 ? 00:00:00 postgres: postgres: logger postgres 6213 6210 0 12:18 ? 00:00:00 postgres: postgres: startup recovering 000000090000000000000006 postgres 6214 6210 0 12:18 ? 00:00:00 postgres: postgres: checkpointer postgres 6215 6210 0 12:18 ? 00:00:00 postgres: postgres: background writer postgres 6216 6210 0 12:18 ? 00:00:00 postgres: postgres: stats collector postgres 6217 6210 0 12:18 ? 00:00:00 postgres: postgres: walreceiver postgres 6222 6210 0 12:18 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(53994) idle |

4.5.4 Leader PostgreSQL 서버 Down

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 9 | | | pgsvr2 | 192.168.56.202:5413 | Replica | streaming | 9 | 0 | +--------+---------------------+---------+-----------+----+-----------+ [postgres@pgserver1 pg13]v13 $ ps -ef | grep postgres postgres 5944 1 0 09:50 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=19pared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 postgres 5946 5944 0 09:50 ? 00:00:00 postgres: postgres: logger postgres 5948 5944 0 09:50 ? 00:00:00 postgres: postgres: checkpointer postgres 5949 5944 0 09:50 ? 00:00:00 postgres: postgres: background writer postgres 5950 5944 0 09:50 ? 00:00:00 postgres: postgres: stats collector postgres 7247 3092 0 10:59 pts/0 00:00:14 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 7256 5944 0 10:59 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.201(56424) idle postgres 7262 5944 0 10:59 ? 00:00:02 postgres: postgres: postgres postgres 192.168.56.201(56426) idle postgres 7394 5944 0 11:03 ? 00:00:00 postgres: postgres: walwriter postgres 7395 5944 0 11:03 ? 00:00:00 postgres: postgres: autovacuum launcher postgres 7396 5944 0 11:03 ? 00:00:00 postgres: postgres: logical replication launcher postgres 9141 5944 0 12:18 ? 00:00:00 postgres: postgres: walsender repl 192.168.56.202(40510) streaming 0/60330D8 [postgres@pgserver1 pg13]v13 $ kill -9 5944 /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Leader | running | 9 | | | pgsvr2 | 192.168.56.202:5413 | Replica | streaming | 9 | 0 | +--------+---------------------+---------+-----------+----+-----------+ .. 시간경과.. /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) --------------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+--------------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | start failed | | unknown | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 10 | | +--------+---------------------+---------+--------------+----+-----------+ 시간이 지나도 pgsvr1이 replica로 붙지 못해서 patroni log를 확인해 보면 다음과 같은 에러를 출력하고 있다 해당 시점의 pgsrv2 의 patroni log를 보면 pgsvr1번 서버의 패트로니로 부터 기동할 수 없다는 메세지가 온 즉시 promote를 진행한다 (약 1초 소요) 201서버의 patroni 재 기동 [postgres@pgserver1 pg13]v13 $ ps -ef | grep patroni postgres 7247 3092 0 10:59 pts/0 00:00:17 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml [postgres@pgserver1 pg13]v13 $ kill -9 7247 [1]+ Killed /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 [postgres@pgserver1 pg13]v13 $ /usr/local/bin/patroni /engine/patroni.yml > /engine/patroni.log 2>&1 & [1] 9879 /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 10 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 10 | | +--------+---------------------+---------+-----------+----+-----------+ 다시 patroni의 로그를 확인해 보면 정상적으로 pg_rewind로 데이터를 동기화 했음을 확인 할 수 있다 |

다시 테스트 !!

이번에는 HammerDB로 tpch 스키마를 생성 시키면서 Leader PostgreSQL을 강제 종료

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 10 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 10 | | +--------+---------------------+---------+-----------+----+-----------+ [postgres@pgserver2 log]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:11 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 6210 1 0 12:18 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 6212 6210 0 12:18 ? 00:00:00 postgres: postgres: logger postgres 6214 6210 0 12:18 ? 00:00:00 postgres: postgres: checkpointer postgres 6215 6210 0 12:18 ? 00:00:05 postgres: postgres: background writer postgres 6216 6210 0 12:18 ? 00:00:00 postgres: postgres: stats collector postgres 6222 6210 0 12:18 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(53994) idle postgres 6249 6210 0 12:26 ? 00:00:00 postgres: postgres: walwriter postgres 6250 6210 0 12:26 ? 00:00:00 postgres: postgres: autovacuum launcher postgres 6251 6210 0 12:26 ? 00:00:00 postgres: postgres: logical replication launcher postgres 6355 6210 0 12:37 ? 00:00:11 postgres: postgres: walsender repl 192.168.56.201(34654) streaming 0/A2B7B360 postgres 6457 6210 0 13:03 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(54042) idle postgres 6707 6210 9 13:09 ? 00:00:52 postgres: postgres: tpch tpch 192.168.56.1(56850) idle [postgres@pgserver2 log]v13 $ kill -9 6210 [postgres@pgserver2 log]v13 $ /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | running | 10 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | crashed | | | +--------+---------------------+---------+---------+----+-----------+ [postgres@pgserver2 log]v13 $ /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) ---------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+---------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | running | 10 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 10 | | +--------+---------------------+---------+---------+----+-----------+ Leader가 변경되지 않았다. 당시의 patroni log를 확인해 보면 PostgreSQL 서버가 죽었을 경우, Patroni가 빠르게 재 기동(13:18:06 --> 13:18:09)을 시키는데 정상적으로 재 기동이 될 경우 기존의 role 체인지는 없으나, 기동을 시키는데 정상 기동이 되지 않을 경우 role을 변경 한다는 것을 확인 할 수 있다 |

4.5.5 etcd가 종료 될 경우

patroni , postgreSQL 모두 정상 동작을 하고 있으나 etcd가 종료 된 경우. 현재의 구성은 etcd를 한대로 구성한 상태

| /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 10 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 10 | | +--------+---------------------+---------+-----------+----+-----------+ [root@pgserver3 ~]# ps -ef | grep etcd root 1483 1468 2 Jan09 pts/0 00:35:35 /engine/etcd-v3.5.11/etcd --config-file /engine/etcd-v3.5.11/etcd.yml [root@pgserver3 ~]# kill -9 1483 [1]+ Killed /engine/etcd-v3.5.11/etcd --config-file /engine/etcd-v3.5.11/etcd.yml > /engine/etcd-v3.5.11/etcd.log 2>&1 /usr/local/bin/patronictl -c /engine/patroni.yml list 2024-01-10 13:38:37,570 - WARNING - Retrying (Retry(total=1, connect=None, read=None, redirect=0, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e1536630>: Failed to establish a new connection: [Errno 111] Connection refused',)': /v2/machines 2024-01-10 13:38:37,571 - WARNING - Retrying (Retry(total=0, connect=None, read=None, redirect=0, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e15362b0>: Failed to establish a new connection: [Errno 111] Connection refused',)': /v2/machines 2024-01-10 13:38:37,572 - ERROR - Failed to get list of machines from http://192.168.56.203:2379/v2: MaxRetryError("HTTPConnectionPool(host='192.168.56.203', port=2379): Max retries exceeded with url: /v2/machines (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e1536780>: Failed to establish a new connection: [Errno 111] Connection refused',))",) 2024-01-10 13:38:42,579 - WARNING - Retrying (Retry(total=1, connect=None, read=None, redirect=0, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e1536cf8>: Failed to establish a new connection: [Errno 111] Connection refused',)': /v2/machines 2024-01-10 13:38:42,580 - WARNING - Retrying (Retry(total=0, connect=None, read=None, redirect=0, status=None)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e1536c50>: Failed to establish a new connection: [Errno 111] Connection refused',)': /v2/machines 2024-01-10 13:38:42,581 - ERROR - Failed to get list of machines from http://192.168.56.203:2379/v2: MaxRetryError("HTTPConnectionPool(host='192.168.56.203', port=2379): Max retries exceeded with url: /v2/machines (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f73e1536fd0>: Failed to establish a new connection: [Errno 111] Connection refused',))",) etcd와의 통신이 끊어지게 되므로 patroni가 정상 동작하지 못한다 PostgreSQL은 정상 동작하는지 확인 (Leader에 접속) [postgres@pgserver2 log]v13 $ psql -h 192.168.56.202 -p 5413 -U postgres -W postgres=# select * from pg_stat_replication ; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | bac kend_xmin | state | sent_lsn | write_lsn | flush_lsn | replay_lsn | write_lag | flush_lag | replay_lag | sync_priority | syn c_state | reply_time ------+----------+---------+------------------+----------------+-----------------+-------------+-------------------------------+---- ----------+-----------+------------+------------+------------+------------+-----------+-----------+------------+---------------+---- --------+------------------------------- 7476 | 16384 | repl | pgsvr1 | 192.168.56.201 | | 34860 | 2024-01-10 13:37:56.066935+09 | | streaming | 0/A478EA78 | 0/A478EA78 | 0/A478EA78 | 0/A478EA78 | | | | 0 | asy nc | 2024-01-10 13:39:06.282104+09 (1 row) PostgreSQL은 정상 동작하는지 확인 (replica에 접속) [postgres@pgserver1 pg13]v13 $ psql -h 192.168.56.201 -p 5413 -U postgres -W postgres=# select * from pg_stat_wal_receiver ; pid | status | receive_start_lsn | receive_start_tli | written_lsn | flushed_lsn | received_tli | last_msg_send_time | last_msg_receipt_time | latest_end_lsn | latest_end_time | slot_name | sender_host | sender_port | conninfo -------+-----------+-------------------+-------------------+-------------+-------------+--------------+----------------------------- --+-------------------------------+----------------+-------------------------------+-----------+----------------+-------------+----- ------------------------------------------------------------------------------------------------------------------------------------ ------------------------------------------------------------------------------------------------------------------------------------ ----------------------- 11182 | streaming | 0/A4000000 | 10 | 0/A478EA78 | 0/A478EA78 | 10 | 2024-01-10 13:39:26.315423+0 9 | 2024-01-10 13:39:26.322137+09 | 0/A478EA78 | 2024-01-10 13:37:56.070323+09 | pgsvr1 | 192.168.56.202 | 5413 | user =repl passfile=/tmp/pgpass0 channel_binding=prefer dbname=replication host=192.168.56.202 port=5413 application_name=pgsvr1 fallback _application_name=postgres sslmode=prefer sslcompression=0 ssl_min_protocol_version=TLSv1.2 gssencmode=prefer krbsrvname=postgres ta rget_session_attrs=any (1 row) PostgreSQL은 정상 동작하고 있는 상태 이때 Leader PostgreSQL을 강제 종료 시키면 ? pgsvr2서버의 PostgreSQL을 kill 시켜 보자 [postgres@pgserver2 log]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:15 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 7461 1 0 13:37 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 7463 7461 0 13:37 ? 00:00:00 postgres: postgres: logger postgres 7464 7461 0 13:37 ? 00:00:00 postgres: postgres: startup recovering 0000000A00000000000000A4 postgres 7465 7461 0 13:37 ? 00:00:00 postgres: postgres: checkpointer postgres 7466 7461 0 13:37 ? 00:00:00 postgres: postgres: background writer postgres 7467 7461 0 13:37 ? 00:00:00 postgres: postgres: stats collector postgres 7472 7461 0 13:37 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(54254) idle postgres 7474 7461 0 13:37 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(54256) idle postgres 7476 7461 0 13:37 ? 00:00:00 postgres: postgres: walsender repl 192.168.56.201(34860) streaming 0/A478EA78 [postgres@pgserver2 log]v13 $ kill -9 7461 [postgres@pgserver2 log]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:16 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml [postgres@pgserver2 log]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:16 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml patroni는 기동 되어 있지만, 종료된 PostgreSQL을 재 기동 시키지는 못한다 이때 pgsvr1서버의 상태는 ? 계속 Replica 상태 이다 [postgres@pgserver1 pg13]v13 $ psql -h 192.168.56.201 -p 5413 -U postgres -W postgres=# select pg_is_in_recovery() ; pg_is_in_recovery ------------------- t (1 row) etcd를 재 기동 시켜 보자!! (현재 Leader PostgreSQL은 Down된 상태이고, Patroni는 etcd와 통신이 안 되서 정상적인 동작을 하지 못하는 상태) [root@pgserver3 ~]# /engine/etcd-v3.5.11/etcd --config-file /engine/etcd-v3.5.11/etcd.yml > /engine/etcd-v3.5.11/etcd.log 2>&1 & [1] 2081 /usr/local/bin/patronictl -c /engine/patroni.yml list + Cluster: postgres (7321565912410233191) -----------+----+-----------+ | Member | Host | Role | State | TL | Lag in MB | +--------+---------------------+---------+-----------+----+-----------+ | pgsvr1 | 192.168.56.201:5413 | Replica | streaming | 11 | 0 | | pgsvr2 | 192.168.56.202:5413 | Leader | running | 11 | | +--------+---------------------+---------+-----------+----+-----------+ [postgres@pgserver2 log]v13 $ ps -ef | grep postgres postgres 6107 3013 0 11:21 pts/0 00:00:18 /usr/bin/python3 /usr/local/bin/patroni /engine/patroni.yml postgres 7967 1 0 13:52 ? 00:00:00 postgres -D /data/pg13 --config-file=/data/pg13/postgresql.conf --listen_addresses=192.168.56.202 --port=5413 --cluster_name=postgres --wal_level=replica --hot_standby=on --max_connections=500 --max_wal_senders=8 --max_prepared_transactions=0 --max_locks_per_transaction=64 --track_commit_timestamp=off --max_replication_slots=10 --max_worker_processes=8 --wal_log_hints=on postgres 7968 7967 0 13:52 ? 00:00:00 postgres: postgres: logger postgres 7974 7967 0 13:52 ? 00:00:00 postgres: postgres: checkpointer postgres 7975 7967 0 13:52 ? 00:00:00 postgres: postgres: background writer postgres 7976 7967 0 13:52 ? 00:00:00 postgres: postgres: stats collector postgres 7979 7967 0 13:52 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(55374) idle postgres 7984 7967 0 13:52 ? 00:00:00 postgres: postgres: walwriter postgres 7985 7967 0 13:52 ? 00:00:00 postgres: postgres: autovacuum launcher postgres 7986 7967 0 13:52 ? 00:00:00 postgres: postgres: logical replication launcher postgres 7990 7967 0 13:52 ? 00:00:00 postgres: postgres: postgres postgres 192.168.56.202(55378) idle postgres 7991 7967 0 13:52 ? 00:00:00 postgres: postgres: walsender repl 192.168.56.201(40830) streaming 0/A478EB90 Leader 서버는 변화되지 않았다. 그런데 TL이 변경된 것을 확인 할 수 있다. TL이 변화가 되었으면 나름의 promote로직이 돌았을 것으로 생각되어서 patroni log 파일을 확인해 본다 |

테스트 결론 :

Leader가 이상이 있을 경우 새로운 Replica를 Leader로 변경하는 대시 기존 Leader를 그대로 유지 하려고 한다

다만 기존 Leader에 문제가 심해서 기동이 안 되거나 할 경우 Replica중 하나를 leader로 변경하여 지속적인 서비스를 가능하게 한다

5. HAproxy

Application에서는 Primary가 어느 서버든지 상관하지 않고, 지속적인 서비스를 원할 경우 VIP를 설정해서 장애 발생시 VIP를 넘겨서 사용하거나, router 서버를 이용하여 Primary에 상관하지 않고

지속적인 서비스를 할 수 있다

HAproxy가 router 역할을 수행하면서 patroni와 통신하면서 leader 서버로 Application의 요청을 보내 주는 역할을 한다

| yum install -y haproxy which haproxy /usr/sbin/haproxy haproxy -v HA-Proxy version 1.5.18 2016/05/10 Copyright 2000-2016 Willy Tarreau <willy@haproxy.org> |

| echo "global maxconn 200 defaults log global mode tcp retries 2 timeout client 30m timeout connect 4s timeout server 30m timeout check 5s listen stats mode http bind *:7000 stats enable stats uri / listen postgres bind *:5413 option httpchk http-check expect status 200 default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions server postgresql_db-node-1_5413 192.168.56.201:5413 maxconn 200 check port 8008 server postgresql_db-node-2_5413 192.168.56.202:5413 maxconn 200 check port 8008" > /etc/haproxy/haproxy.cfg listen stats : HAproxy 서버에 대한 상태 체크 가능 http://ip:7000 listen postgres : Application에 대한 접속을 받아서 해당 시점의 Leader노드의 PostgreSQL로 연결 server postgresql_[db-node-1_5413] : 서버 이름 지정 192.168.56.201:5413 : PostgeSQL 서버 아이피:포트 maxconn 200 : 해당 PostgreSQL 서버의 max_connection 값과 일치 시킴 check port 8008 : 해당 서버의 patroni restapi port |

| systemctl restart haproxy systemctl status haproxy netstat -tunelp | grep LISTEN tcp 0 0 192.168.56.203:2379 0.0.0.0:* LISTEN 0 24473 2320/etcd tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 0 24472 2320/etcd tcp 0 0 192.168.56.203:2380 0.0.0.0:* LISTEN 0 24471 2320/etcd tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 0 15932 967/sshd tcp 0 0 0.0.0.0:7000 0.0.0.0:* LISTEN 0 25950 2483/haproxy tcp 0 0 127.0.0.1:7001 0.0.0.0:* LISTEN 0 24470 2320/etcd tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 0 19650 1145/master tcp 0 0 0.0.0.0:5413 0.0.0.0:* LISTEN 0 25951 2483/haproxy tcp6 0 0 :::22 :::* LISTEN 0 15934 967/sshd tcp6 0 0 ::1:25 :::* LISTEN 0 19651 1145/master |

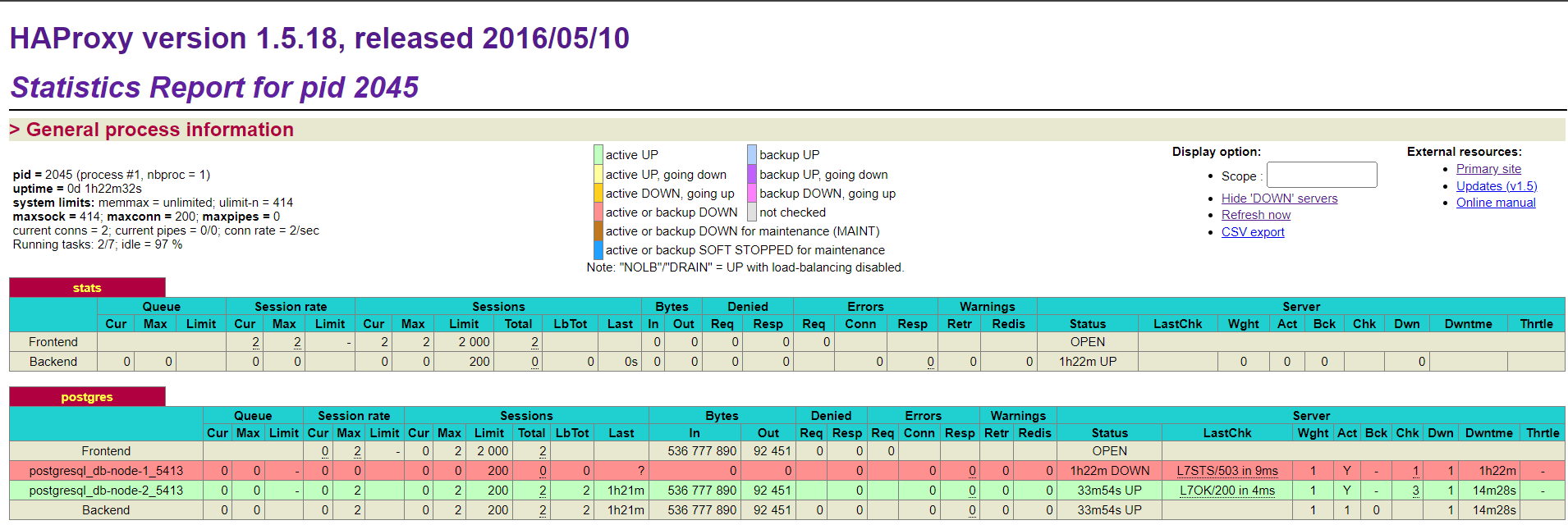

HAproxy 정보 확인 (http://haproxy_server_ip:7000)

Client는 haproxy_server_ip 5413포트를 이용하여 접속

| psql -h 192.168.56.203 -p 5413 -U postgres -W Password: psql (13.11) Type "help" for help. postgres=# select * from pg_stat_replication ; pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_start | bac kend_xmin | state | sent_lsn | write_lsn | flush_lsn | replay_lsn | write_lag | flush_lag | replay_lag | sync_priority | syn c_state | reply_time ------+----------+---------+------------------+----------------+-----------------+-------------+-------------------------------+---- ----------+-----------+------------+------------+------------+------------+-----------+-----------+------------+---------------+---- --------+------------------------------- 7991 | 16384 | repl | pgsvr1 | 192.168.56.201 | | 40830 | 2024-01-10 13:52:28.603568+09 | | streaming | 0/A478EB90 | 0/A478EB90 | 0/A478EB90 | 0/A478EB90 | | | | 0 | asy nc | 2024-01-10 14:28:14.514275+09 (1 row) |

6. 종료 / 기동

종료

HAproxy 종료 --> Replica 서버의 patroni --> Replica 서버의 PostgreSQL --> Leader 서버의 Patroni --> Leader 서버의 PostgreSQL --> etcd 클러스터의 Leader가 아닌 서버 --> etcd 클러스터의 Leader

기동

etcd 기동 (기동 시 알아서 Leader선출) --> leader 서버의 patroni --> replica 서버의 patroni --> HAproxy

덧, 기존에는 oneNote 붙여 넣기가 oneNote랑 똑같이 잘 붙여 넣어 져서 가독성이 좋았었는데

이제는 제대로 붙여 넣어 지지가 않아서 포스팅 하기가 귀찮아지네요 ...